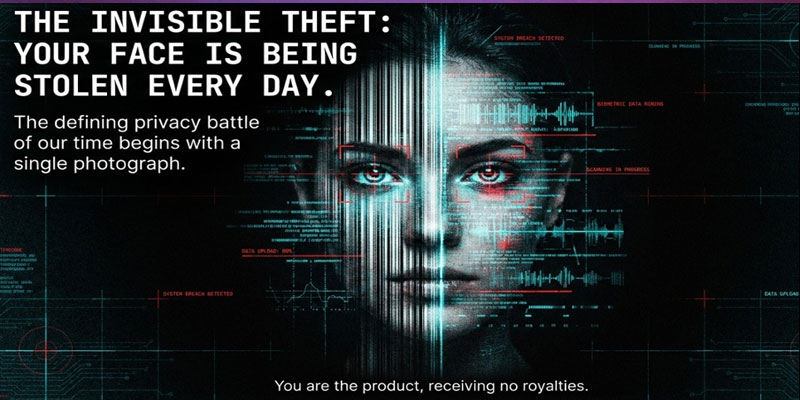

By 2026, as AI-generated deepfakes increasingly target facial recognition systems, 30% of enterprises will no longer consider traditional biometric identity verification reliable on its own, according to a report from Gartner, Inc.

“AI has evolved to a point where synthetic facial images—deepfakes—can convincingly replicate real people,” said Akif Khan, VP Analyst at Gartner. “This allows attackers to bypass or manipulate biometric authentication, making it nearly impossible to distinguish between genuine and artificial identities.”

Most biometric systems today rely on Presentation Attack Detection (PAD) to assess user liveness. However, current PAD frameworks do not address digital injection attacks, a newer class of threats in which AI-generated video or images are fed directly into the verification pipeline instead of being presented to a camera. Gartner noted that such injection attacks rose 200% in 2023, highlighting a rapidly growing security gap.

To mitigate these risks, Gartner recommends enterprises adopt multilayered defenses, integrating Injection Attack Detection (IAD) and image inspection tools capable of verifying human presence and detecting synthetic content.

Organizations should also leverage device identification, behavioral analytics, and continuous monitoring to enhance security resilience. Khan urged companies to collaborate with vendors investing in counter-deepfake technologies and to redefine trust baselines.
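To make the layered approach concrete, here is a minimal sketch of how the signals named above might be combined into one decision. It is an illustration only, not a method described by Gartner. The class name, field names, thresholds, and the assumption that each layer reports a score between 0 and 1 (1 meaning "looks genuine") are all hypothetical.

```python
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class VerificationSignals:
    """Hypothetical per-session scores in [0, 1]; 1 means 'looks genuine'."""
    pad_liveness: float        # Presentation Attack Detection
    iad_integrity: float       # Injection Attack Detection
    image_authenticity: float  # image inspection for synthetic content
    device_trust: float        # device identification
    behavior_match: float      # behavioral analytics


def decide(signals: VerificationSignals,
           hard_floor: float = 0.3,
           accept_at: float = 0.8) -> str:
    """Return 'accept', 'step_up', or 'reject' (thresholds are assumed)."""
    scores = astuple(signals)
    # One badly failing layer rejects the session, so a convincing face
    # cannot compensate for evidence that the video feed was injected.
    if min(scores) < hard_floor:
        return "reject"
    # Otherwise require a high average before accepting outright;
    # borderline sessions are sent to an additional check.
    mean_score = sum(scores) / len(scores)
    return "accept" if mean_score >= accept_at else "step_up"


# A deepfake that passes liveness but fails injection detection is rejected.
print(decide(VerificationSignals(0.95, 0.10, 0.90, 0.90, 0.90)))
```

The design point is that no single layer, including facial liveness, can approve a session on its own. This mirrors the report's claim that traditional biometric verification is no longer reliable in isolation.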

As deepfakes blur the boundary between real and artificial, Gartner warns that the future of digital identity will depend on authentication systems that can verify not only who a user is, but also that a real human is present.